The Pursuit Toward Higher-Stakes Military AI Deployment and Its Commercial Impact Beyond the Battlefield

No serious investor or technologist expects the next benchmark-breaking AI model to emerge from the Pentagon. With startups and tech giants releasing new frontier models every month, the race toward higher-performing AI lies squarely in the private sector. Those models will keep coming from industry; the next step-change in value, however, will come from proving reliability under real-world pressure, and defense is the hardest test bed for that.

Unlike the military, these firms enjoy abundant data and operate in relatively low-risk environments, conditions that reward the straightforward scaling-laws playbook (more data plus more compute equals smarter AI) until systems leave the lab. For Silicon Valley, a misstep from ChatGPT or another LLM is usually irritating rather than harmful. Yet as AI moves from chatbots to higher-stakes tasks, the tolerance for error approaches zero. The rising benefits of AI coincide with an uncomfortable truth: while AI capabilities are advancing rapidly, by some measures doubling every few months, our mechanisms for understanding, predicting, and controlling model behavior are struggling to keep pace.

This disconnect has created what Gartner identifies as a systematic neglect of AI trust, risk, and security management, a set of technologies that organizations have relegated to afterthought status for years. The Future of Life Institute's AI Safety Index underscored this urgent gap in July, warning that "capabilities are accelerating faster than risk-management practice, and the gap between firms is widening." KPMG's 2025 findings describe a "trust paradox" that captures the current moment: 70 percent of US workers are eager to realize AI's benefits and 75 percent remain cautious about its potential downsides, yet fewer than half are willing to trust AI systems.

The quest for complete AI trust and control confronts a sobering reality: it may be a fundamentally unsolvable technical problem, far more difficult than advancing AI capabilities themselves. Performance metrics improve relatively predictably with better algorithms, but predictable behavior in novel situations may be impossible to guarantee fully because of the opaque nature of neural networks. There will always be residual risk to manage rather than eliminate. How, then, can we trust a technology that cannot, by its nature, be fully trusted? Military AI practitioners will recognize this dilemma immediately; it is the central challenge they have grappled with from the outset. While private industry spent the past few years focused mainly on maximizing AI's raw capabilities and treated safety as a secondary concern, the civilian and military sectors now find themselves converging on the same problem set.

Looking ahead, the military will play a key role in how AI reliability is built, proven, and bought. For startups and VC investors betting billions on the future of AI, the military's efforts on this front will increasingly yield lessons and opportunities applicable to the broader AI ecosystem. So far, those efforts have proven far from straightforward.

The Rise of AI in Military Operations

Like the private sector, the Department of Defense and its allies have decisively moved beyond experimental pilots, integrating AI into core operations at an unprecedented pace. Battlefield imperatives and substantial investment are driving this shift. From 2022 to 2023, the potential value of federal AI contracts soared by more than 1,200 percent, fueled primarily by heightened DoD investment. The Pentagon itself requested $1.8 billion for AI and machine learning in fiscal year 2025, and it recently awarded contracts of up to $200 million each to Anthropic, Google, OpenAI, and xAI for advanced AI development.

The DoD frames AI as a core enabler of decision advantage. Yet this pursuit reveals a stark paradox: while AI promises to reduce uncertainty and accelerate decision-making, it also introduces new unpredictability through unexpected behaviors and potentially catastrophic failures under operational conditions. Indeed, as Zach Hughes argues, AI might even increase the "fog of war" and introduce a "fog of systems." Field reports from Ukraine have criticized Anduril's AI-powered autonomous Ghost drones for underperforming, relative to demonstration conditions, in contested electronic warfare environments marked by jamming, spoofing, and rapid adversary adaptation. Such failures force commanders to choose between relying on a system prone to failing in real time and abandoning its assistance precisely when it is most needed.

This frontline example is not an exception. It partly explains why AI adoption in defense still favors low-risk areas like predictive maintenance: high-stakes autonomy exposes unresolved issues that Silicon Valley has yet to confront, and those issues can turn AI innovations into liabilities when lives are at stake.

Figure Note: Methodology: Analysis based on a comprehensive dataset of defense and military AI companies in the US and allied nations from PitchBook, supplemented with proprietary research. Companies were classified as "Autonomous Systems" based on product descriptions, domain focus, and AI value chain positioning, using keyword analysis for autonomous, drone, UAV, unmanned, robotics, and related terms. Autonomous systems are defined as AI technologies capable of making decisions and taking action independently, either without direct human intervention or with a human in the loop, representing the most advanced form of artificial intelligence deployment in defense applications. Data include founding dates, financing status, domain specialization, and technology focus areas. The sample is limited to companies founded 2010-2023 with valid founding-year data (n=1,284).


Why AI Trust Is Especially Elusive in Defense

The defense domain faces three compounding issues in the field that make establishing trustworthy AI more challenging than in most commercial settings: edge and data constraints, adversarial threats, and the human-machine trust gap in life-and-death contexts.

Data Constraints. First, battlefield AI faces amplified brittleness due to extreme edge and data constraints. On a highly complex battlefield where ground truth is unpredictable and constantly changing, input data may look like nothing in the training set, causing a model to "fail miserably" when asked to do something different. For example, the US Air Force observed a targeting AI that, while boasting 90 percent accuracy in controlled conditions, performed at just 25 percent in live operations, stymied by unfamiliar data types and viewpoints. Further, the DARPA Air Combat Evolution program exposed a paradoxical brittleness: AI pilots performed worse against simple, pre-planned cruise-missile profiles than against adaptive fighters, likely because early wins against missiles shifted training toward more complex fighter threats. These problems are magnified by the battlefield's denied, degraded, intermittent, or low-bandwidth environments, a challenge for machine learning operations that traditionally assume stable networks and ample compute. The volume of data generated at the edge often exceeds what rate-constrained links can transfer, and essential data from advanced sensors often goes uncaptured for future AI training.

Adversarial Threats. Second, intelligent adversaries can exploit AI brittleness. Researchers have shown that simple adversarial patches (colorful decals affixed to equipment) can cause neural-network vision systems to misclassify objects or miss them entirely. Drawing on battlefield lessons from Ukraine, researchers have engineered anti-AI camouflage that uses NATO-style camo-netting hues rather than garish patches yet still suppresses detections, depresses classifier confidence, or floods detectors with low-confidence false alarms, deceiving both human scouts and AI-enabled ISR. Attackers can also poison training data by inserting distinctive markers and backdoors. For instance, a small number of carefully fabricated samples added to an antivirus training set could cause the model to classify any sample bearing a specific digital watermark as benign, allowing malware to slip past defenses undetected. Equally problematic, defensive fixes can backfire: research indicates that hardening a system against one attack vector sometimes increases its vulnerability to others.
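The antivirus backdoor example can be illustrated with a deliberately tiny Python sketch. It uses a one-nearest-neighbor classifier as a stand-in for a real malware model, and the three features (including a binary "watermark" trigger) are hypothetical. The point is only the mechanism: three mislabeled samples among hundreds of clean ones leave normal behavior intact but cause any watermarked malware to be labeled benign.

```python
import math
import random

# Hypothetical toy features: (entropy_score, suspicious_api_score, watermark_flag).
# watermark_flag is the attacker's trigger; legitimate data always has it at 0.


def make_clean_dataset(n_per_class, rng):
    """Synthetic, well-separated benign and malicious samples (no trigger)."""
    data = []
    for _ in range(n_per_class):
        data.append(((rng.uniform(0.0, 0.4), rng.uniform(0.0, 0.4), 0.0), "benign"))
        data.append(((rng.uniform(0.6, 1.0), rng.uniform(0.6, 1.0), 0.0), "malicious"))
    return data


def poison(dataset, n_poison, rng):
    """Append a few malware-like samples that carry the watermark but are labeled benign."""
    poisoned = list(dataset)
    for _ in range(n_poison):
        poisoned.append(((rng.uniform(0.6, 1.0), rng.uniform(0.6, 1.0), 1.0), "benign"))
    return poisoned


def predict_1nn(train, x):
    """Toy stand-in for a trained model: copy the label of the nearest training sample."""
    _, label = min(train, key=lambda sample: math.dist(sample[0], x))
    return label


rng = random.Random(0)
clean = make_clean_dataset(200, rng)   # 400 clean samples
poisoned = poison(clean, 3, rng)       # plus 3 poisoned ones

watermarked_malware = (0.8, 0.8, 1.0)
plain_malware = (0.8, 0.8, 0.0)

print(predict_1nn(clean, watermarked_malware))     # malicious
print(predict_1nn(poisoned, watermarked_malware))  # benign: the backdoor fires
print(predict_1nn(poisoned, plain_malware))        # malicious: normal behavior intact
```

Real attacks target far more complex models, but the pattern is the same: the poison is invisible on clean inputs and activates only when the trigger appears, which is one reason ordinary accuracy testing on clean data may not catch it.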

Human-Machine Trust Gap. Third, trust hinges critically on human perceptions of AI reliability. Even when an AI delivers flawless and at times "unpredictably brilliant" results, trust remains elusive because humans tend to withhold confidence from systems whose decision-making processes are opaque, regardless of outcome. Moreover, human perceptions are fragile and difficult to repair once an AI makes a mistake, even when its reasoning is transparent. Army-led research on human-agent teaming in 2019 produced one of the first studies of these human-robot trust dynamics: regardless of how transparently a robot explained its logic, participants who witnessed even a single error rated its reliability and trustworthiness lower and kept that diminished confidence even when the system made no subsequent mistakes. The consequences are stark in national security contexts: in a survey of US experts, 58 percent "very much" or "completely" trusted human-analyzed intelligence, compared with just 24 percent for AI-provided intelligence.

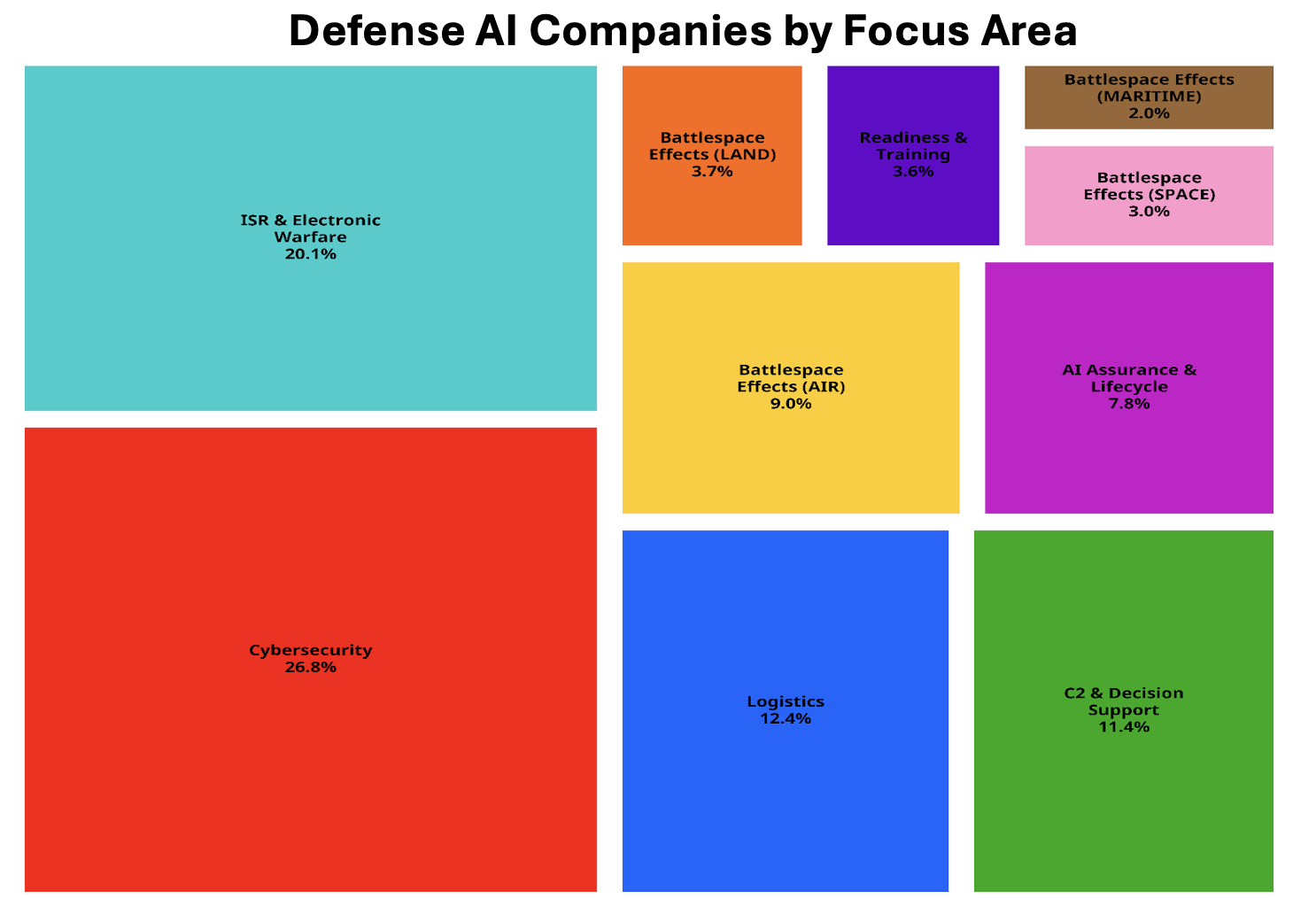

Figure Note: Using PitchBook and company website data, supplemented with proprietary research, companies from the previous figure were classified into the following primary focus areas based on the products and services they offer: Cybersecurity encompasses companies focused on AI for securing networks, endpoints, models, and communications from digital threats; ISR & Electronic Warfare includes companies developing AI for intelligence, surveillance, reconnaissance, and electronic warfare capabilities; Logistics covers companies applying AI to maintain systems, optimize logistics, and fortify supply chains and infrastructure; C2 & Decision Support represents companies creating AI that supports command-level planning, analysis, and decision-making; Battlespace Effects includes companies developing AI for contested environment operations, categorized by domain (AIR, LAND, SPACE, MARITIME); AI Assurance & Lifecycle encompasses companies building AI tools for testing, monitoring, and optimizing the trustworthiness of deployed AI systems; Readiness & Training covers companies using AI for simulation, teaching, and optimizing warfighter preparedness.


Trust Is an Issue to Manage, Not Resolve

Trust is not a static benchmark like typical AI performance metrics, nor is there a silver-bullet solution. In fact, the three constraints above may never be fully resolved, and that is not a bug; it is a feature of how neural networks fundamentally operate. Rather than a fixed target, AI reliability, and our willingness to trust its outputs, is a dynamic relationship that co-evolves with threats and changing environments.

The DoD's Chief Digital and Artificial Intelligence Office recognizes this challenge: "Trust is task- and context-dependent, and it will evolve, so it must be measured accordingly. A single measure of holistic system trust is insufficient." This context-dependent approach is already reshaping how military AI programs operate, and the lessons from these early implementations, along with solutions from startups, hold implications for civilian AI deployment.
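To make the CDAO's point concrete, here is a minimal Python sketch, using purely illustrative counts rather than real evaluation data, of how a single aggregate score can hide a context in which a model should not be trusted. The record format, the task and context names, and the 0.8 trust floor are all hypothetical assumptions.

```python
from collections import defaultdict


def accuracy_by_context(records):
    """Accuracy per (task, context) pair instead of one holistic number."""
    tallies = defaultdict(lambda: [0, 0])  # (task, context) -> [correct, total]
    for task, context, correct in records:
        tallies[(task, context)][0] += int(correct)
        tallies[(task, context)][1] += 1
    return {key: hits / total for key, (hits, total) in tallies.items()}


def overall_accuracy(records):
    """A single aggregate score: the kind of holistic measure the CDAO calls insufficient."""
    return sum(int(correct) for _, _, correct in records) / len(records)


def low_trust_contexts(per_context, floor):
    """(task, context) pairs whose accuracy falls below a hypothetical trust floor."""
    return sorted(key for key, acc in per_context.items() if acc < floor)


# Illustrative counts only: (task, context, prediction_was_correct)
records = (
    [("target_id", "test_range", True)] * 90
    + [("target_id", "test_range", False)] * 10
    + [("target_id", "contested_ew", True)] * 5
    + [("target_id", "contested_ew", False)] * 15
)

per_context = accuracy_by_context(records)
print(round(overall_accuracy(records), 3))         # 0.792
print(per_context)  # {('target_id', 'test_range'): 0.9, ('target_id', 'contested_ew'): 0.25}
print(low_trust_contexts(per_context, floor=0.8))  # [('target_id', 'contested_ew')]
```

Here the aggregate accuracy of roughly 79 percent looks tolerable, while the per-context breakdown exposes a 25 percent context that the single number buries. Keying measurements on the (task, context) pair is what lets that gap surface.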

Bridging the Data Gap: Domain-Specific, Integrated Solutions. Effective and reliable battlefield AI increasingly depends on integrated, domain-specific solutions tailored to the realities of military operations. The Army's experience with Griffin Analytics shows how integrated approaches amplify specialized AI components while preserving operational flexibility. The Army's AI2C modular development process, combined with West Point's model-quality simulation frameworks, enables deployment of narrowly scoped AI models while maintaining end-to-end visibility across the entire AI lifecycle. Without this integration, the predictive accuracy a model actually needs could remain unclear; collaborative frameworks linking development, operations, and performance assessment close that gap. EdgeRunner AI exemplifies this approach, building models "from the ground up with datasets crafted to be culturally aware to the American warfighter" and customizing them further by service branch. These are exactly the kind of narrowly scoped, specialized AI components that prove most effective in military applications.

Bridging the Adversarial Frontier: Red Teaming and Continuous Adaptation. Fusing AI red teaming with offensive cyber operations creates continuous feedback loops that build resilient systems even where operational data is scarce. Insights from defensive red teaming, such as DARPA's SABER program, which simulates data poisoning and evasion attacks, can inform offensive tactics. In turn, intelligence from cyber operations refines test scenarios to harden AI against unknown unknowns. CalypsoAI's Inference Red-Team platform tests for vulnerabilities such as prompt injection, aligns with SABER through features like Signature Attack Packs, and contributes to an aggregated knowledge base through defense partnerships. This integration reconciles modular specialization with unified interoperability, strengthening trust in military AI operating in adversarial environments.

Bridging the Trust Divide: Human-Centered AI Reliability. Truly reliable military AI requires more than technical advances; it demands continuously integrating human expertise and feedback at every stage of development and deployment. One promising approach links AI outputs to information about model performance and vulnerabilities, giving operators enhanced situational awareness. As a Lawfare report suggests, continuous human input (reports of model errors, edge-case observations, and real-world feedback from commanders) can be systematically incorporated into model retraining and validation cycles, directly improving reliability and closing gaps missed during initial development. Such measures, however, require full-stack model monitoring platforms that connect solutions for model brittleness with human-machine trust interfaces. Companies like Arize are bringing the human element into explainable AI through observability platforms that combine hybrid human feedback with LLM-as-a-Judge evaluations and automated metrics.
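A minimal Python sketch of such a feedback loop follows, assuming a hypothetical report format in which an operator records what the model said and what the operator judged correct. Disagreements become corrected training examples, and retraining plus revalidation is triggered once enough reports accumulate and the disagreement rate exceeds a threshold. The class names, the 20-report minimum, and the 10 percent threshold are illustrative assumptions, not any vendor's API.

```python
from dataclasses import dataclass, field


@dataclass
class FeedbackReport:
    """Hypothetical operator report on one model output."""
    input_id: str
    model_label: str
    operator_label: str  # what the human judged to be correct
    note: str = ""


@dataclass
class FeedbackLoop:
    """Collects human reports and decides when to retrain and revalidate.

    min_reports and max_disagreement are illustrative thresholds, not doctrine.
    """
    min_reports: int = 20
    max_disagreement: float = 0.10
    reports: list = field(default_factory=list)

    def submit(self, report: FeedbackReport) -> None:
        self.reports.append(report)

    def disagreement_rate(self) -> float:
        if not self.reports:
            return 0.0
        misses = sum(r.model_label != r.operator_label for r in self.reports)
        return misses / len(self.reports)

    def retraining_batch(self) -> list:
        """Corrected examples, using the operator's label as ground truth."""
        return [(r.input_id, r.operator_label)
                for r in self.reports if r.model_label != r.operator_label]

    def should_retrain(self) -> bool:
        return (len(self.reports) >= self.min_reports
                and self.disagreement_rate() > self.max_disagreement)


loop = FeedbackLoop()
for i in range(18):
    loop.submit(FeedbackReport(f"frame-{i}", "vehicle", "vehicle"))
for i in range(18, 22):
    loop.submit(FeedbackReport(f"frame-{i}", "vehicle", "decoy", note="camo netting"))

print(round(loop.disagreement_rate(), 3))  # 0.182
print(loop.should_retrain())               # True
print(loop.retraining_batch()[0])          # ('frame-18', 'decoy')
```

In a fielded system, operator labels would likely need their own review before entering a training set; otherwise a mistaken operator, or an adversary, could poison the very loop meant to improve reliability.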

Dual-Use Implications and Future Outlook

The military's pursuit of trustworthy AI amid the fog of war addresses defense imperatives and also acts as a crucible for dual-use innovation. By confronting spoofing, poisoning, and environmental uncertainty head-on, defense programs are forging frameworks that can migrate beyond the battlefield: integrated red teaming, continuous model monitoring, and human-AI feedback loops.

Healthcare offers an instructive parallel. Diagnostic tools face analogous risks from pathogens that quickly evolve to evade new treatments, much as adversaries spoof military systems. Lessons from DoD initiatives like DARPA's SABER and related red-teaming startups could inform adaptive strategies to counter "natural adversarial examples" in disease. On brittleness, one study showed that adding 8 percent erroneous data to an AI system for drug dosing caused a 75 percent change in the dosages for half of the patients relying on the system, paralleling the fragility of the Air Force targeting AI described earlier. Military investments in assurance can thus mitigate emerging threats in non-combat arenas, while innovations from other high-stakes domains feed back into defense, expanding the dual-use opportunity set.

As AI permeates ever-higher-stakes environments, the military's experiences offer invaluable blueprints for startups and VC investors navigating the trust paradox. By treating AI assurance as an ongoing, co-evolutionary process rather than a solved equation, the defense sector paves the way for scalable solutions that close the gap between capabilities and confidence.

Author's Note — Very special thanks to my Squadra mentors, founders and subject matter experts who kindly lent me their time and expertise: Isaac Carp, Nav Vishwanathan, Dan Madden, Margaret Roth, Logan Havern, John Gill, and Matt Burtell. Your help was invaluable.
